DevOps Architecture

DevOps architecture brings together teams from development and operations to make the software delivery process faster and more efficient. By breaking down the barriers between these departments, it allows for quicker time-to-market, better collaboration, and improved software quality.

DevOps is everywhere these days. If you follow software or web development even casually, you have almost certainly come across it. You might know it as “the link between development and operations,” but do you truly grasp its essence?

Ultimately, DevOps helps companies ensure they can roll out quality-tested, user-approved software frequently and consistently, whether for internal use or a broader audience. To achieve this, businesses embracing DevOps have ditched older, clunkier development models in favor of what’s arguably the most agile approach to software release.

Companies adopting DevOps architecture have discovered how to produce software releases more efficiently and rapidly. In this article, we’ll delve into what exactly DevOps architecture entails and how it could be a game-changer for your enterprise.

Why DevOps?

The next crucial question to address is why DevOps is so essential.

In previous project delivery methods, we encountered several issues:

- The development and operations teams worked in isolation and failed to collaborate effectively.

- The sequential process of design, build, test, and deployment led to prolonged delivery times.

- Without DevOps, team members dedicated significant time to designing, testing, and deploying instead of focusing on project development.

- Manual code deployment resulted in human errors during production.

- The development and operations teams followed separate timelines, leading to synchronization issues and additional delays.

The 7 Cs of DevOps architecture are listed below:

- Continuous Development

- Continuous Integration

- Continuous Testing

- Continuous Deployment/Continuous Delivery

- Continuous Monitoring

- Continuous Feedback

- Continuous Operations

DevOps Methodology

DevOps is a methodology designed to enhance workflow throughout the software development lifecycle. Visualized as an ongoing loop, it encompasses key steps: planning, coding, building, testing, releasing, deploying, operating, monitoring, and, crucially, incorporating feedback to continually refine the process. This approach takes into account all necessary elements for successful outcomes, including people, processes, and technology. It emphasizes the following critical considerations:

- Team Dynamics: Ensuring alignment with the project mission and effective cloud management.

- Connectivity: Providing access to public, on-premise, and hybrid cloud networks as needed.

- Automation: Implementing infrastructure as code and scripting for efficient resource orchestration and deployment.

- Onboarding Procedures: Streamlining the process for initiating projects in the cloud environment.

- Project Environments: Establishing standardized environments such as staging, development, and production for consistent deployment and testing.

- Shared Services: Offering common capabilities and resources across the enterprise for increased efficiency.

- Naming Conventions: Utilizing standardized naming conventions to facilitate resource tracking and billing management.

- Role Definitions and Permissions: Clearly defining roles within teams and assigning appropriate permissions for resource access based on job functions.
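As one illustration of the naming-convention item above, the following is a minimal sketch of a helper that builds and validates resource names. The pattern `<team>-<project>-<environment>-<resource>`, the function name `resource_name`, and the allowed environment labels are all assumptions made for this example, not a standard; any real convention should follow your own cloud provider's rules.

```python
import re

# Hypothetical environment labels, mirroring the development, staging,
# and production environments mentioned above.
ALLOWED_ENVIRONMENTS = {"dev", "staging", "prod"}

# Each part is restricted to lowercase letters and digits so that the
# hyphen can be used unambiguously as the separator.
_PART_PATTERN = re.compile(r"^[a-z0-9]+$")


def resource_name(team: str, project: str, environment: str, resource: str) -> str:
    """Build a name of the assumed form <team>-<project>-<environment>-<resource>."""
    if environment not in ALLOWED_ENVIRONMENTS:
        raise ValueError(f"unknown environment: {environment!r}")
    parts = [team, project, environment, resource]
    for part in parts:
        if not _PART_PATTERN.match(part):
            raise ValueError(f"invalid name part: {part!r}")
    return "-".join(parts)


def parse_resource_name(name: str) -> dict:
    """Split a name back into its parts, e.g. for grouping billing reports."""
    team, project, environment, resource = name.split("-")
    return {
        "team": team,
        "project": project,
        "environment": environment,
        "resource": resource,
    }
```

Because every name can be parsed back into team, project, and environment, costs and resources can be grouped along those dimensions without a separate lookup table.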

DevOps Principles

When you have an architecture that empowers small developer teams to independently implement, test, and deploy code into production swiftly and securely, you can significantly boost developer productivity and enhance deployment outcomes. A crucial aspect of service-oriented and microservice architectures is their composition of loosely coupled services with well-defined boundaries. One notable framework for modern web architecture rooted in these principles is the twelve-factor app.

Randy Shoup highlighted:

“Organizations employing such service-oriented architectures, like Google and Amazon, enjoy remarkable flexibility and scalability. Despite having tens of thousands of developers, these organizations witness exceptional productivity from small teams.”

In numerous organizations, testing and deploying services prove to be challenging tasks. Rather than overhauling everything at once, we advocate for an iterative approach to enhancing the design of your enterprise system. This approach, known as evolutionary architecture, acknowledges that successful products and services will necessitate architectural adjustments over their lifespan to accommodate evolving requirements.

A valuable strategy in this regard is the strangler fig application pattern. Here, you gradually replace a monolithic architecture with a more modular one by ensuring that new development adheres to service-oriented architecture principles. Initially, the new architecture may rely on the existing system. However, as more functionality migrates to the new architecture, the old system gradually fades away.
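The routing idea behind the strangler fig pattern can be sketched in a few lines. This is a simplified illustration, not a production design: the class `StranglerRouter`, the handlers `legacy_monolith` and `orders_service`, and the URL prefixes are hypothetical names chosen for the example. In practice this role is usually played by a reverse proxy or API gateway.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def legacy_monolith(path: str) -> str:
    """Stand-in for the existing monolithic system."""
    return f"legacy handled {path}"


def orders_service(path: str) -> str:
    """Stand-in for a newly extracted service."""
    return f"orders-service handled {path}"


class StranglerRouter:
    """Sends migrated paths to new services and everything else to the monolith."""

    def __init__(self, fallback: Handler) -> None:
        self._fallback = fallback
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        """Register a path prefix that the new architecture now owns."""
        self._migrated[prefix] = handler

    def handle(self, path: str) -> str:
        # Check longer prefixes first so the most specific route wins.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        # Anything not yet migrated still goes to the old system.
        return self._fallback(path)


router = StranglerRouter(fallback=legacy_monolith)
router.migrate("/orders", orders_service)
```

Each call to `migrate` moves one more slice of functionality to the new architecture; once no requests reach the fallback, the old system can be retired.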

Product and service architectures are in a constant state of evolution. Determining what should be a new module or service involves iterative decision-making. When evaluating whether a piece of functionality should be transformed into a service, consider if it exhibits the following characteristics:

- Implements a single business function or capability.

- Operates with minimal dependencies on other services.

- Can be developed, scaled, and deployed independently.

- Communicates with other services using lightweight methods like message buses or HTTP endpoints.

- Can be implemented using various tools, programming languages, or data stores.

Transitioning to microservices or a service-oriented architecture brings about organizational changes. Steve Yegge’s platform rant underscores several critical lessons learned from this transition:

- Metrics and monitoring become vital, and addressing escalations becomes more challenging due to the interconnected nature of services.

- Internal services can cause Denial of Service (DoS) issues for one another, necessitating measures like quotas and message throttling.

- The lines between QA and monitoring blur, necessitating comprehensive monitoring that tests business logic and data.

- Service discovery mechanisms become essential for efficient system operation in the presence of numerous services.

- The absence of a universal standard for running services in a debuggable environment makes debugging issues in other services more complex.
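The quotas and message throttling mentioned in the list above are often implemented with a token bucket. The sketch below is one minimal way to do it, under the assumption that each calling service gets its own bucket; the class name `TokenBucket` and its parameters are illustrative, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity` requests, refilled at a steady rate."""

    def __init__(
        self,
        capacity: int,
        refill_per_second: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = float(capacity)  # start with a full bucket
        self._last = clock()

    def allow(self) -> bool:
        """Return True and consume a token if the caller is within its quota."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add tokens for the time that has passed, never exceeding capacity.
        self._tokens = min(
            float(self.capacity), self._tokens + elapsed * self.refill_per_second
        )
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

A service would typically keep one bucket per client and reject or queue a request whenever `allow()` returns `False`, so a single noisy internal caller cannot starve the others.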

Best Practice Tools in DevOps

Collaboration, automation, and continuous integration and delivery are key components of the DevOps methodology. Organizations rely on a variety of robust tools to put DevOps into practice. Here are a few widely used tools that are central to a successful DevOps practice:

1. Git: Git is a cornerstone of contemporary software development. It is a popular distributed version control system that is free and open source. With Git, teams can manage many branches, collaborate effectively, and track changes to their code. It is essential for DevOps teams because it stores source code and provides a clear history of a project's development.

2. Jenkins: Jenkins is a key tool for CI/CD. It provides a rich ecosystem of plugins, making it highly adaptable to different development environments. Because Jenkins automates the build, test, and deployment processes, teams can find and fix errors early in the development cycle.

3. Docker: Docker has transformed how applications are distributed and deployed. Containerization packages an application and its dependencies into a single unit, making it portable and simple to manage. Docker promotes consistency across environments, which helps eliminate the infamous “works on my machine” problem.

4. Selenium: Selenium is a popular testing framework for web applications. It enables developers to build reliable automated tests that verify the functionality and quality of web applications. Its cross-browser compatibility and support for multiple programming languages make Selenium a good option for DevOps testing.

5. Puppet: Puppet is a powerful configuration management tool that automates infrastructure administration. It enables DevOps teams to define infrastructure as code, simplifying the provisioning and consistent management of resources. Puppet's automation features speed up software delivery while maintaining security and reliability.

6. Ansible: Ansible is a popular configuration management tool known for being simple and easy to use. Its declarative style makes it well suited to automating the deployment and configuration of applications, and its agentless architecture makes it easier to manage large and varied infrastructure.

7. Kubernetes: Kubernetes is a well-known open-source platform for container orchestration. It automates the deployment, scaling, and management of containerized applications. With Kubernetes, developers can abstract away infrastructure concerns, which makes deployment across many environments easier.

8. Terraform: Terraform is a useful tool for managing infrastructure as code. It offers a consistent CLI workflow for deploying resources across diverse cloud services. Its automated provisioning helps prevent human error and keeps the workflow repeatable, which makes Terraform a valuable tool for DevOps teams.

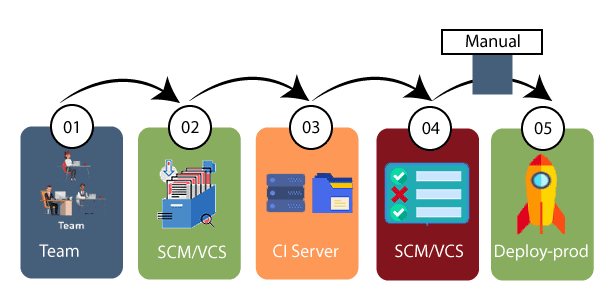

Lifecycle of DevOps

1) Continuous Development

This phase involves planning and coding the software. The vision of the project is decided during planning, and the developers then begin writing the code for the application. No DevOps tools are required for planning, but many version control tools are used to maintain the code, a process called source code maintenance. Popular version control tools include Git, Mercurial, and SVN, and they are often paired with an issue tracker such as JIRA. In addition, build tools such as Ant, Gradle, and Maven package the code into executable files, which are then forwarded to the next phase of the DevOps lifecycle.

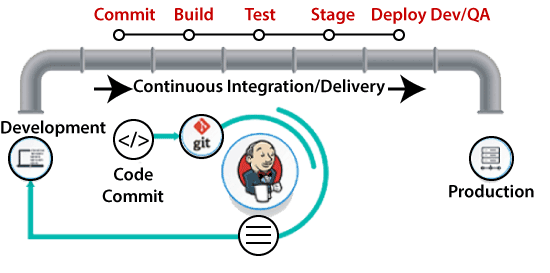

2) Continuous Integration

This stage is the heart of the entire DevOps lifecycle. It is a software development practice in which developers are required to commit changes to the source code frequently, on a daily or weekly basis. Every commit is then built, which allows problems to be detected early. Building the code involves not only compilation but also unit testing, integration testing, code review, and packaging.

The code supporting new functionality is continuously integrated with the existing code. Therefore, there is continuous development of software. The updated code needs to be integrated continuously and smoothly with the systems to reflect changes to the end-users.

Jenkins is a popular tool used in this phase. Whenever there is a change in the Git repository, Jenkins fetches the updated code and prepares a build of it, an executable file in the form of a WAR or JAR. This build is then forwarded to the test server or the production server.
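The build-on-every-commit flow can be pictured as a sequence of stages that stops at the first failure. The sketch below is a conceptual model only, not how Jenkins is configured (Jenkins pipelines are defined in its own pipeline syntax); the function `run_pipeline` and the stage names are hypothetical.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order; stop at the first failure.

    Returns (succeeded, names of the stages that completed).
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            # A failing stage blocks everything after it, so a broken
            # commit never reaches packaging or deployment.
            return False, completed
        completed.append(name)
    return True, completed


# Example with placeholder steps; a real pipeline would invoke the
# compiler, the test runner, and the packaging tool here.
example_stages: List[Stage] = [
    ("compile", lambda: True),
    ("unit-tests", lambda: True),
    ("package", lambda: True),
]
```

Failing fast is the point of the design: the earlier a stage rejects a commit, the cheaper the problem is to locate and fix.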

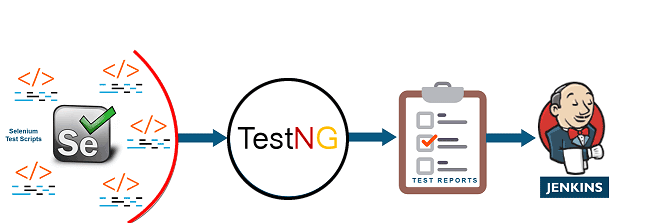

3) Continuous Testing

In this phase, the developed software is continuously tested for bugs. Automation testing tools such as TestNG, JUnit, and Selenium are used for this. These tools allow QA engineers to test multiple codebases thoroughly in parallel to ensure that there are no flaws in the functionality. Docker containers can be used to simulate the test environment.

Selenium does the automation testing, and TestNG generates the reports. The entire testing phase can be automated with the help of a continuous integration tool such as Jenkins.

Automated testing saves a lot of the time and effort that running the tests manually would take. Apart from that, report generation is a big plus: it simplifies the task of evaluating which test cases failed in a test suite. We can also schedule the execution of test cases at predefined times. After testing, the code is continuously integrated with the existing code.
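To make the idea of an automated test suite with a machine-readable report concrete, here is a small sketch using Python's built-in `unittest` module as a stand-in for tools like JUnit or TestNG. The function under test, `apply_discount`, and the helper `run_suite` are invented for the example.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business function used only to have something to test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self) -> None:
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percent(self) -> None:
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


def run_suite() -> unittest.TestResult:
    """Run the suite programmatically and return the collected results."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
    result = unittest.TestResult()
    suite.run(result)
    return result
```

A CI job could call `run_suite()` on every commit and inspect `result.failures` and `result.errors` to report exactly which test cases failed.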

4) Continuous Monitoring

Monitoring covers all the operational factors of the DevOps process: important information about how the software is used is recorded and carefully processed to find trends and identify problem areas. Usually, monitoring is integrated into the operational capabilities of the software application.

It may take the form of documentation files, or it may produce large-scale data about the application's parameters while the application is in continuous use. System errors such as an unreachable server or low memory are resolved in this phase, which maintains the security and availability of the service.
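The two example errors above, an unreachable server and low memory, map naturally onto simple threshold checks. The following sketch assumes that samples have already been collected by some agent; the `HostSample` type, the `find_alerts` function, and the 512 MB default threshold are illustrative choices, not recommendations.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HostSample:
    """One monitoring sample for a host (hypothetical shape)."""

    host: str
    reachable: bool
    free_memory_mb: int


def find_alerts(samples: List[HostSample], min_free_memory_mb: int = 512) -> List[str]:
    """Return a human-readable alert for every sample that breaches a rule."""
    alerts: List[str] = []
    for sample in samples:
        if not sample.reachable:
            # Memory figures are meaningless if the host did not respond.
            alerts.append(f"{sample.host}: server not reachable")
        elif sample.free_memory_mb < min_free_memory_mb:
            alerts.append(
                f"{sample.host}: low memory ({sample.free_memory_mb} MB free)"
            )
    return alerts
```

Real monitoring systems add history, trends, and notification routing on top, but the core loop of comparing observations against thresholds looks much like this.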

5) Continuous Feedback

Application development is consistently improved by analyzing the results from the operation of the software. This is done by placing a critical phase of constant feedback between operations and the development of the next version of the application.

Continuity is the essential factor in DevOps because it removes the unnecessary steps otherwise required to take a software application from development, through use that reveals its issues, to a better version. Those extra steps reduce the efficiency the application could achieve and reduce the number of interested customers.

6) Continuous Deployment

In this phase, the code is deployed to the production servers. It is also essential to ensure that the code is deployed correctly on all of the servers.

New code is deployed continuously, and configuration management tools play an essential role in executing these tasks frequently and quickly. Popular tools used in this phase include Chef, Puppet, Ansible, and SaltStack.

Containerization tools also play an essential role in the deployment phase. Docker is a popular tool for this purpose, and Vagrant serves a similar goal by provisioning reproducible virtual machine environments. These tools help to produce consistency across development, testing, staging, and production environments. They also help in scaling instances up and down smoothly.

Containerization tools help to maintain consistency across the environments where the application is developed, tested, and deployed. They greatly reduce the chance of errors or failures in the production environment because they package and replicate the same dependencies and packages used in the development, testing, and staging environments. This makes the application easy to run on different computers.
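Configuration management tools generally work by comparing a desired state with the actual state of each server and changing only what differs. The sketch below models that idea in its simplest form, with a single version string per server; `plan_rollout`, `apply_rollout`, and the host names are hypothetical, and the assignment inside the loop stands in for a real deployment step.

```python
from typing import Dict, List


def plan_rollout(desired_version: str, servers: Dict[str, str]) -> List[str]:
    """List the hosts whose deployed version differs from the desired one."""
    return sorted(
        host for host, version in servers.items() if version != desired_version
    )


def apply_rollout(desired_version: str, servers: Dict[str, str]) -> List[str]:
    """Bring every out-of-date host to the desired version.

    Returns the hosts that were changed. Running it a second time changes
    nothing, which is the idempotence these tools aim for.
    """
    changed = plan_rollout(desired_version, servers)
    for host in changed:
        servers[host] = desired_version  # stand-in for an actual deploy
    return changed


fleet = {"web-1": "1.4.0", "web-2": "1.3.2", "web-3": "1.4.0"}
```

Calling `apply_rollout("1.4.0", fleet)` touches only `web-2`; a repeated call returns an empty list because every server already matches the desired state.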

7) Continuous Operations

All DevOps operations are based on continuity, with complete automation of the release process, which allows the organization to keep accelerating its overall time to market.

It is clear from this discussion that continuity is the critical factor in DevOps: it removes the steps that often distract development, delay the detection of issues, and mean a better version of the product arrives only after several months. With DevOps, we can make any software product more efficient and increase the number of customers interested in it.
